Index

NIT 067- BIG DATA

1.

UNDERSTANDING BIG DATA

1.1.

What is big

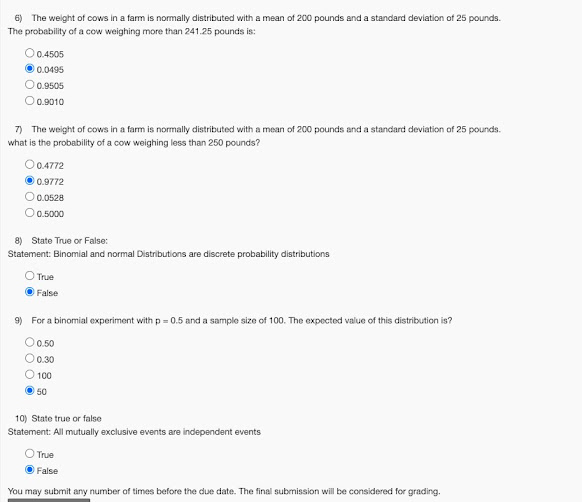

data,

1.2.

why big

data,

1. UNDERSTANDING BIG DATA

1.1 What is big data:

Big data is data that exceeds the processing capacity of conventional database

systems. The data is too big, moves too fast, or does not fit the structures of

traditional database architectures. In other words, Big data is an

all-encompassing term for any collection of data sets so large and complex that

it becomes difficult to process using on-hand data management tools or

traditional data processing applications. To gain value from this data, you

must choose an alternative way to process it. Big Data is the next generation

of data warehousing and business analytics and is poised to deliver top line

revenues cost efficiently for enterprises. Big data is a popular term used to

describe the exponential growth and availability of data, both structured and

unstructured. Every day, we create 2.5 quintillion bytes of data — so much that

90% of the data in the world today has been created in the last two years

alone. This data comes from everywhere: sensors used to gather climate

information, posts to social media sites, digital pictures and videos, purchase

transaction records, and cell phone GPS signals to name a few. This data is big

data.

Definition: Big data usually includes data sets with sizes beyond the ability of commonly used software tools to capture, create, manage, and process the data within a tolerable elapsed time. Big data is high-volume, high-velocity and high-variety information assets that demand cost-effective, innovative forms of information processing for enhanced insight and decision-making.

Big Data is a collection of large

datasets that cannot be processed using traditional computing techniques. It is

not a single technique or tool; rather, it involves many areas of business and

technology.

Increasingly, organizations today are facing more and more Big Data challenges. They have

access to a wealth of information, but they don’t know how to get value out of

it because it is sitting in its most raw form or in a semistructured or

unstructured format; as a result, they don’t even know whether it’s worth keeping (or whether they are even able to keep it, for that matter).

What Comes Under Big Data?

Big data involves the data produced by different devices and applications. Given below are

some of the fields that come under the umbrella of Big Data.

• Black Box Data: The black box is a component of helicopters, airplanes, and jets. It captures the voices of the flight crew, recordings from microphones and earphones, and performance information about the aircraft.

• Social Media Data: Social media sites such as Facebook and Twitter hold information and views posted by millions of people across the globe (Fig. 1).

• Stock Exchange Data: Stock exchange data holds information about the ‘buy’ and ‘sell’ decisions that customers make on shares of different companies.

• Power Grid Data: Power grid data holds information about the power consumed by a particular node with respect to a base station.

• Transport Data: Transport data includes the model, capacity, distance, and availability of a vehicle.

• Search Engine Data: Search engines retrieve large amounts of data from different databases.

Thus Big Data includes huge volume, high velocity, and an extensible variety of data. The data in it will be of three types:

• Structured data: relational data.

• Semistructured data: XML data.

• Unstructured data: Word, PDF, text, media logs.

Characteristics of Big Data

Three characteristics define Big Data: volume, variety, and velocity (as

shown in Figure ).

Together, these characteristics define

what we refer to as “Big Data.”

Can There Be Enough? The Volume of Data

The sheer volume of data being stored today is exploding. In the year 2000,

800,000 petabytes (PB) of data were stored in the world. Of course, a lot of

the data that’s being created today isn’t analyzed at all, and that’s another problem that large companies are trying to address with their respective tools. The volume of stored data is expected to reach 35 zettabytes (ZB)

by 2020. Twitter alone generates more than 7 terabytes (TB) of data every day,

Facebook 10 TB, and some enterprises generate terabytes of data every hour of

every day of the year. It’s no longer unheard of for individual enterprises to

have storage clusters holding petabytes of data. We’re going to stop right

there with the factoids: Truth is, these estimates will be out of date by the

time you read this book, and they’ll be further out of date by the time you

bestow your great knowledge of data growth rates on your friends and families

when you’re done reading this book.
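As a rough sanity check on the figures quoted above, the arithmetic can be sketched in a few lines of Python. The sketch assumes decimal (SI) units, where 1 PB is 10^15 bytes and 1 ZB is 10^21 bytes, and it assumes smooth compound growth between 2000 and 2020. Neither assumption comes from the text, and the function names are illustrative only.

```python
# Decimal (SI) byte units. This is an assumption: the text does not say
# whether it means decimal or binary prefixes.
PB = 10**15
ZB = 10**21


def pb_to_zb(petabytes: float) -> float:
    """Convert petabytes to zettabytes."""
    return petabytes * PB / ZB


def annual_growth_rate(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by growing from start to end."""
    return (end / start) ** (1 / years) - 1


stored_2000_zb = pb_to_zb(800_000)   # figure quoted for the year 2000
projected_2020_zb = 35.0             # figure quoted for 2020
growth_factor = projected_2020_zb / stored_2000_zb
rate = annual_growth_rate(stored_2000_zb, projected_2020_zb, years=20)

print(f"2000: {stored_2000_zb:.1f} ZB")
print(f"Growth factor 2000-2020: {growth_factor:.2f}x")
print(f"Implied annual growth: {rate:.1%}")
```

Under these assumptions, 800,000 PB is 0.8 ZB, so the projection corresponds to roughly a 44-fold increase over two decades, or about 21% growth a year.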

When you stop and think about it, it’s

little wonder we’re drowning in data. If we can track and record something, we

typically do. (And notice we didn’t mention the analysis of this stored data,

which is going to become a theme of Big Data—the newfound utilization of data

we track and don’t use for decision making.)

As implied by the term “Big Data,”

organizations are facing massive volumes of data. Organizations that don’t know

how to manage this data are overwhelmed by it. But the opportunity exists, with

the right technology platform, to analyze almost all of the data (or at least

more of it by identifying the data that’s useful to you) to gain a better

understanding of your business, your customers, and the marketplace. And this

leads to the current conundrum facing today’s businesses across all

industries. As the amount of data available to the enterprise is on the rise,

the percent of data it can process, understand, and analyze is on the decline,

thereby creating the blind zone. What’s in that blind zone? You don’t know: it

might be something great, or may be nothing at all, but the “don’t know” is the

problem (or the opportunity, depending how you look at it).

The conversation about data volumes has

changed from terabytes to petabytes with an inevitable shift to zettabytes, and

all this data can’t be stored in your traditional systems for reasons that

we’ll discuss in this chapter and others.

Variety Is the Spice of Life

The volume associated with the Big Data phenomenon brings along a new challenge for data centers trying to deal with it: its variety. With the explosion of sensors and smart devices, as well as

social collaboration technologies, data in an enterprise has become complex,

because it includes not only traditional relational data, but also raw,

semistructured, and unstructured data from web pages, web log files (including

click-stream data), search indexes, social media forums, e-mail, documents,

sensor data from active and passive systems, and so on. What’s more,

traditional systems can struggle to store and perform the required analytics to

gain understanding from the contents of these logs because much of the

information being generated doesn’t lend itself to traditional database

technologies. In our experience, although some companies are moving down the

path, by and large, most are just beginning to understand the opportunities of

Big Data (and what’s at stake if it’s not considered).

Quite simply, variety represents all types of data—a fundamental

shift in analysis requirements from traditional structured data to include raw,

semistructured, and unstructured data as part of the decision-making and

insight process. Traditional analytic platforms can’t handle variety. However,

an organization’s success will rely on its ability to draw insights from the

various kinds of data available to it, which includes both traditional and

nontraditional.

When we look back at our database careers,

sometimes it’s humbling to see that we spent more of our time on just 20

percent of the data: the relational kind that’s neatly formatted and fits ever

so nicely into our strict schemas. But the truth of the matter is that 80

percent of the world’s data (and more and more of this data is responsible for

setting new velocity and volume records) is unstructured, or semistructured at

best. If you look at a Twitter feed, you’ll see structure in its JSON

format—but the actual text is not structured, and understanding that can be

rewarding. Video and picture images aren’t easily or efficiently stored in a relational database; certain event information (such as weather patterns) can change dynamically, which isn’t well suited to strict schemas; and so on.
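The point about a feed being structured on the outside but unstructured on the inside can be illustrated with a minimal Python sketch. The record below is invented, and its field names are assumptions chosen for illustration; they are not the schema of any real social media API.

```python
import json

# A made-up record shaped loosely like a social media post. The field
# names are assumptions for illustration, not a real API schema.
raw = """
{
  "id": 1,
  "user": {"name": "example_user", "followers": 42},
  "created_at": "2015-01-01T12:00:00Z",
  "text": "Flight delayed again... great start to the holiday :("
}
"""

post = json.loads(raw)

# The envelope is structured: fields can be addressed by name.
print(post["user"]["name"], post["created_at"])

# The message body is free text: extracting meaning needs further
# analysis. Here we only split it into lowercase word tokens.
tokens = [w.strip(".,:;()!?").lower() for w in post["text"].split()]
tokens = [t for t in tokens if t]
print(tokens)
```

Parsing the JSON is trivial; deciding whether "great start" is sincere or sarcastic is the hard, unstructured part that the tokens alone do not answer.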

To capitalize on the Big Data opportunity, enterprises must be able to analyze all types of data, both relational and nonrelational: text, sensor data, audio, video, transactional, and more.

How Fast Is Fast? The Velocity of Data

Just as the sheer volume and variety of

data we collect and store has changed, so, too, has the velocity at which it is generated and needs to be

handled. A conventional understanding of velocity typically considers how

quickly the data is arriving and stored, and its associated rates of retrieval.

While managing all of that quickly is good (and the volumes of data that we are looking at are a consequence of how quickly the data arrives), we believe the idea

of velocity is actually something far more compelling than these conventional

definitions.

To accommodate velocity, a new way of

thinking about a problem must start at the inception point of the data. Rather

than confining the idea of velocity to the growth rates associated with your

data repositories, we suggest you apply this definition to data in motion: The speed at which the data is flowing. After

all, we’re in agreement that today’s enterprises are dealing with petabytes of

data instead of terabytes, and the increase in RFID sensors and other

information streams has led to a constant flow of data at a pace that has made

it impossible for traditional systems to handle.

Sometimes, getting an edge over your

competition can mean identifying a trend, problem, or opportunity only seconds,

or even microseconds, before someone else. In addition, more and more of the

data being produced today has a very short shelf-life, so organizations must be

able to analyze this data in near real time if they hope to find insights in

this data.

Dealing effectively with Big Data requires

that you perform analytics against the volume and variety of data while it is still in motion, not just after it is at rest.
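Analyzing data while it is still in motion can be sketched, under simplifying assumptions, as a computation that updates its result as each reading arrives instead of waiting for the full data set to be stored. The sketch below uses a sliding-window average; the window size, the sample readings, and the function names are all hypothetical.

```python
from collections import deque
from typing import Iterable, Iterator


def moving_average(readings: Iterable[float], window: int) -> Iterator[float]:
    """Yield the mean of the last `window` readings as each one arrives."""
    recent = deque(maxlen=window)   # old readings drop off automatically
    total = 0.0
    for value in readings:
        if len(recent) == window:
            total -= recent[0]      # value about to be evicted
        recent.append(value)
        total += value
        yield total / len(recent)


def sensor_stream() -> Iterator[float]:
    """Stand-in for a live feed; a real source would be unbounded."""
    yield from [10.0, 12.0, 11.0, 30.0, 12.0]


for average in moving_average(sensor_stream(), window=3):
    print(f"{average:.2f}")
```

Because the generator keeps only the last few readings in memory, it works the same way on an endless stream as on this five-element stand-in, which is the essential difference from analyzing data at rest.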

1.2 Why big data

• Big Data solutions are ideal for analyzing not only raw structured data, but also semistructured and unstructured data from a wide variety of sources.

• Big Data solutions are ideal when all,

or most, of the data needs to be analyzed versus a sample of the data; or a

sampling of data isn’t nearly as effective as a larger set of data from which

to derive analysis.

• Big Data solutions are ideal for

iterative and exploratory analysis when business measures on data are not

predetermined.

• Is the reciprocal of the traditional analysis paradigm appropriate for the business

task at hand? Better yet, can you see a Big Data platform complementing what

you currently have in place for analysis and achieving synergy with existing

solutions for better business outcomes? For example, typically, data bound for

the analytic warehouse has to be cleansed, documented, and trusted before it’s

neatly placed into a strict warehouse schema (and, of course, if it can’t fit

into a traditional row and column format, it can’t even get to the warehouse in

most cases). In contrast, a Big Data solution is not only going to leverage data

not typically suitable for a traditional warehouse environment, and in massive

amounts of volume, but it’s going to give up some of the formalities and

“strictness” of the data. The benefit is that you can preserve the fidelity of

data and gain access to mountains of information for exploration and discovery

of business insights before running it through the due diligence that you’re accustomed to; that data can then be included as a participant in a cyclic system, enriching the models in the warehouse.

• Big Data is well suited for solving information challenges that don’t natively fit within a traditional relational database approach.
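The contrast drawn above, between cleansing data into a strict warehouse schema first and keeping raw data for exploration, is often described as schema-on-write versus schema-on-read. That terminology is not used in the text and is introduced here as an assumption. A minimal Python sketch of the second approach, with invented records and a hypothetical helper name, might look like this:

```python
import json

# Raw events kept exactly as they arrived. Note that the records do not
# share one fixed set of fields. All data here is invented.
raw_lines = [
    '{"device": "pump-1", "temp_c": 71.5}',
    '{"device": "pump-2", "temp_c": 69.0, "vibration": 0.3}',
    '{"device": "pump-3", "note": "manual inspection"}',
]


def read_with_schema(lines, fields):
    """Project each raw record onto `fields`, using None where absent."""
    for line in lines:
        record = json.loads(line)
        yield {name: record.get(name) for name in fields}


# The same raw data can be read with different schemas chosen at query time.
temps = list(read_with_schema(raw_lines, ["device", "temp_c"]))
vibes = list(read_with_schema(raw_lines, ["device", "vibration"]))
print(temps)
print(vibes)
```

Nothing is rejected at load time, so the fidelity of the raw data is preserved; the cost is that each reader has to cope with missing or unexpected fields.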

IT for IT Log Analytics

Log analytics is a common use case for an

inaugural Big Data project. We like to refer to all those logs and trace data

that are generated by the operation of your IT solutions as data exhaust. Enterprises have lots of data exhaust,

and it’s pretty much a pollutant if it’s just left around for a couple of hours

or days in case of emergency and simply purged. Why? Because we believe data

exhaust has concentrated value, and IT shops need to figure out a way to store

and extract value from it. Some of the value derived from data exhaust is

obvious and has been transformed into value-added click-stream data that

records every gesture, click, and movement made on a web site.
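To make the idea of extracting value from data exhaust concrete, here is a small Python sketch that parses log lines and aggregates them. The log layout, the sample lines, and the field names are all invented for illustration; real logs vary widely and would need their own pattern.

```python
import re
from collections import Counter

# Invented sample lines in a simplified "timestamp level key=value" layout.
log_lines = [
    "2015-01-01T10:00:00 INFO user=alice action=login",
    "2015-01-01T10:00:05 ERROR user=bob action=checkout",
    "2015-01-01T10:00:09 INFO user=alice action=view_item",
    "malformed line without the expected fields",
    "2015-01-01T10:01:30 ERROR user=alice action=checkout",
]

LINE = re.compile(
    r"^(?P<ts>\S+) (?P<level>[A-Z]+) user=(?P<user>\S+) action=(?P<action>\S+)$"
)

levels = Counter()
errors_by_action = Counter()
skipped = 0

for line in log_lines:
    match = LINE.match(line)
    if match is None:
        skipped += 1            # keep count instead of silently dropping
        continue
    levels[match["level"]] += 1
    if match["level"] == "ERROR":
        errors_by_action[match["action"]] += 1

print(levels.most_common())
print(errors_by_action.most_common(1))
print("skipped:", skipped)
```

Even this toy aggregation surfaces something that a purge would have destroyed: in the sample data, every error occurs during checkout.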

The Fraud Detection Pattern

Fraud detection comes up a lot in the

financial services vertical, but if you look around, you’ll find it in any sort

of claims- or transaction-based environment (online auctions, insurance claims,

underwriting entities, and so on). Pretty much anywhere some sort of financial

transaction is involved presents a potential for misuse and the ubiquitous

specter of fraud. If you leverage a Big Data platform, you have the opportunity

to do more than you’ve ever done before to identify it or, better yet, stop it.

They Said What? The Social Media Pattern

Perhaps the most talked about Big Data

usage pattern is social media and customer sentiment. You can use Big Data to

figure out what customers are saying about you (and perhaps what they are

saying about your competition); furthermore, you can use this newly found

insight to figure out how this sentiment impacts the decisions you’re making

and the way your company engages. More specifically, you can determine how

sentiment is impacting sales, the effectiveness or receptiveness of your

marketing campaigns, the accuracy of your marketing mix (product, price,

promotion, and placement), and so on.

Social media analytics is a pretty hot

topic, so hot in fact that IBM has built a solution specifically to accelerate

your use of it: Cognos Consumer Insights (CCI). It’s a point solution that runs

on BigInsights and it’s quite good at what it does. CCI can tell you what

people are saying, how topics are trending in social media, and all sorts of

things that affect your business, all packed into a rich visualization engine.

Big Data and the Energy Sector

The energy sector provides many Big Data

use case challenges in how to deal with the massive volumes of sensor data from

remote installations. Many companies are using only a fraction of the data

being collected, because they lack the infrastructure to store or analyze the

available scale of data. Take for example a typical oil drilling platform that

can have 20,000 to 40,000 sensors on board. All of these sensors are streaming

data about the health of the oil rig, quality of operations, and so on. Not every

sensor is actively broadcasting at all times, but some are reporting back many

times per second. Now take a guess at what percentage of those sensors are

actively utilized. If you’re thinking in the 10 percent range (or even 5

percent), you’re either a great guesser or you’re getting the recurring theme

for Big Data that spans industry and use cases: clients aren’t using all of the

data that’s available to them in their decision-making process. Of course, when

it comes to energy data (or any data for that matter) collection rates, it

really begs the question, “If you’ve bothered to instrument the user or device

or rig, in theory, you’ve done it on purpose, so why are you not capturing and leveraging the information you are

collecting?”

Benefits of Big Data

Using the information kept in social networks like Facebook, marketing agencies are learning about the response to their campaigns, promotions, and other advertising media.

Using information from social media, such as the preferences and product perceptions of their consumers, product companies and retail organizations are planning their production.

Using data on patients’ previous medical history, hospitals are providing better and quicker service.

Why Big data?

1. Understanding and Targeting Customers

This is one of the biggest and most

publicized areas of big data use today. Here, big data is used to better

understand customers and their behaviors and preferences.

Companies are keen to expand their

traditional data sets with social media data, browser logs as well as text

analytics and sensor data to get a more complete picture of their customers.

The big objective, in many cases, is to create predictive models. You might remember the example of U.S. retailer Target, which is now able to predict very accurately when one of its customers is expecting a baby. Using big data, telecom companies can now better predict customer churn, Wal-Mart can predict what products will sell, and car insurance companies understand how

well their customers actually drive. Even government election campaigns can be

optimized using big data analytics.

2. Understanding and Optimizing Business Processes

Big data is also increasingly used to

optimize business processes. Retailers are able to optimize their stock based

on predictions generated from social media data, web search trends and weather

forecasts. One particular business process that is seeing a lot of big data

analytics is supply chain or delivery route optimization. Here, geographic

positioning and radio frequency identification sensors are used to track goods

or delivery vehicles and optimize routes by integrating live traffic data, etc.

HR business processes are also being improved using big data analytics. This includes the optimization of talent acquisition, Moneyball style, as well as the measurement of company culture and staff engagement using big data tools.

3. Personal Quantification and Performance Optimization

Big data is not just for companies and

governments but also for all of us individually. We can now benefit from the

data generated from wearable devices such as smart watches or smart bracelets.

Take the Up band from Jawbone as an example: the armband collects data on our

calorie consumption, activity levels, and our sleep patterns. While it gives

individuals rich insights, the real value is in analyzing the collective data.

In Jawbone’s case, the company now collects 60 years’ worth of sleep data every night. Analyzing such volumes of data will bring entirely new insights that it can feed back to individual users. The other area where we benefit from big data analytics is finding love (online, that is). Most online dating sites apply big data tools and algorithms to find us the most appropriate matches.

4. Improving Healthcare and Public Health

The computing power of big data analytics

enables us to decode entire DNA strings in minutes and will allow us to find

new cures and better understand and predict disease patterns. Just think of what happens when all the individual data from smart watches and wearable devices can be applied to millions of people and their various diseases.

The clinical trials of the future won’t be limited by small sample sizes but could

potentially include everyone! Big data techniques are already being used to monitor

babies in a specialist premature and sick baby unit. By recording and analyzing

every heart beat and breathing pattern of every baby, the unit was able to

develop algorithms that can now predict infections 24 hours before any physical

symptoms appear. That way, the team can intervene early and save fragile babies

in an environment where every hour counts. What’s more, big data analytics allows us to monitor and predict the development of epidemics and disease outbreaks. Integrating data from medical records with social media analytics enables us to monitor flu outbreaks in real time, simply by listening to what people are saying, e.g. “Feeling rubbish today - in bed with a cold”.

5. Improving Sports Performance

Most elite sports have now embraced big

data analytics. We have the IBM SlamTracker tool for tennis tournaments; we use

video analytics that track the performance of every player in a football or

baseball game, and sensor technology in sports equipment such as basketballs

or golf clubs allows us to get feedback (via smart phones and cloud servers) on

our game and how to improve it. Many elite sports teams also track athletes outside

of the sporting environment – using smart technology to track nutrition and sleep,

as well as social media conversations to monitor emotional wellbeing.

6. Improving Science and Research

Science and research are currently being transformed by the new possibilities big data brings. Take, for example, CERN, the European particle physics laboratory near Geneva, with its Large Hadron Collider, the world’s

largest and most powerful particle accelerator. Experiments to unlock the

secrets of our universe – how it started and works - generate huge amounts of

data. The CERN data center has 65,000 processors to analyze its 30 petabytes of

data. However, it uses the computing powers of thousands of computers

distributed across 150 data centers worldwide to analyze the data. Such

computing powers can be leveraged to transform so many other areas of science

and research.

7. Optimizing Machine and Device Performance

Big data analytics help machines and

devices become smarter and more autonomous. For example, big data tools are

used to operate Google’s self-driving car. The Toyota Prius is fitted with

cameras, GPS as well as powerful computers and sensors to safely drive on the

road without the intervention of human beings. Big data tools are also used to

optimize energy grids using data from smart meters. We can even use big data

tools to optimize the performance of computers and data warehouses.

8. Improving Security and Law Enforcement

Big data is applied heavily in improving

security and enabling law enforcement. I am sure you are aware of the

revelations that the National Security Agency (NSA) in the U.S. uses big data

analytics to foil terrorist plots (and maybe spy on us). Others use big data

techniques to detect and prevent cyber attacks. Police forces use big data tools to catch criminals and even predict criminal activity, and credit card companies use big data to detect fraudulent transactions.

9. Improving and Optimizing Cities and Countries

Big data is used to improve many aspects

of our cities and countries. For example, it allows cities to optimize traffic

flows based on real time traffic information as well as social media and

weather data. A number of cities are currently piloting big data analytics with

the aim of turning themselves into Smart Cities, where the transport infrastructure and utility processes are all joined up: a bus would wait for a delayed train, and traffic signals would predict traffic volumes and operate to minimize jams.

10. Financial Trading

My final category of big data application

comes from financial trading. High-Frequency Trading (HFT) is an area where big

data finds a lot of use today. Here, big data algorithms are used to make

trading decisions. Today, the majority of equity trading takes place via data algorithms that increasingly take into account signals from social media networks and news websites to make buy and sell decisions in split seconds.

Operational Big Data

These include systems like MongoDB that provide

operational capabilities for real-time, interactive workloads where data is

primarily captured and stored.

NoSQL Big Data systems are designed to take

advantage of new cloud computing architectures that have emerged over the past

decade to allow massive computations to be run inexpensively and efficiently.

This makes operational big data workloads much

easier to manage, cheaper, and faster to implement.

Some NoSQL systems can provide insights into

patterns and trends based on real-time data with minimal coding and without the

need for data scientists and additional infrastructure.

Let’s break down the three main stages in the evolution of data systems:

■ Dependent (Early Days). Data systems were fairly new and users didn’t quite know what they wanted. IT assumed, “Build it and they shall come.”

■ Independent (Recent Years). Users understood what an analytical platform was

and worked together with IT to define the business needs and approach for

deriving insights for their firm.

■ Interdependent (Big Data Era). Interactional stage between

various companies, creating more social collaboration beyond your firm’s walls.